
Let me start by saying that all future arguments about robots should use the word “droids” instead so that we can use more Star Wars references.

Benzell, Kotlikoff, LaGarda, and Sachs (BKLS) have a new NBER working paper out on robots (sorry, I don’t see an ungated version). They are attempting to do what needs to be done: provide a baseline model of economic growth that explicitly accounts for the ability of software in the form of robots to replace human workers. With such a baseline model we could then see under what conditions we get what they call “immiserating growth” where we are actually made worse off by inventing robots. Perhaps we could then use the model to test out different policies and see how to alleviate or prevent such immiserating growth.

Thus I am totally on board with the goals of the paper. But I don’t know that this particular model is the right way to think about how robots will affect us in the future. There are several reasons:

Wealth Distribution. The model has skilled workers and unskilled workers, yes, but it does not distinguish those with high initial wealth (capable of saving a lot in the form of robots) from those with little or none. This eliminates the possibility of having wealthy robot owners run the wage down so far that no human is employable anymore. While I don’t think that is going to happen, the model should allow for it so we can see under what conditions that might be right or wrong.

Modeling Code. The actual model of how capital (robots) and code work together seems too crude. Essentially, robots and code work together in a production function. Code today is equal to some fraction of the code from yesterday (the rest presumably becomes incompatible) plus whatever new code we hire skilled workers to write. The “shock” that BKLS study is a dramatic increase in the fraction of code that lasts from one period to the next. In their baseline, zero percent of the code lasts, meaning that to work with capital we have to continually reprogram them. Robotics, or AI, or whatever it is that they are intending to capture then shocks this percent up to 70%.
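To make the law of motion for code concrete, here is a minimal sketch in Python. It follows the verbal description above: code today equals the surviving fraction of yesterday's code plus newly written code. The function names and the assumption that a fixed amount of new code is written each period are mine, chosen purely for illustration; in the BKLS model the amount of new code depends on how many skilled workers are hired to write it.

```python
def code_stock_path(persistence, new_code_per_period, periods, initial_stock=0.0):
    """Return the stock of code in each period.

    Law of motion: stock_t = persistence * stock_{t-1} + new code written in t.
    Holding new code fixed each period is an illustrative assumption only.
    """
    path = []
    stock = initial_stock
    for _ in range(periods):
        stock = persistence * stock + new_code_per_period
        path.append(stock)
    return path


def steady_state_stock(persistence, new_code_per_period):
    """Long-run stock implied by the law of motion (requires persistence < 1)."""
    return new_code_per_period / (1.0 - persistence)


# Baseline: no code survives. Shock: 70% of code survives each period.
baseline = code_stock_path(persistence=0.0, new_code_per_period=1.0, periods=10)
shocked = code_stock_path(persistence=0.7, new_code_per_period=1.0, periods=10)

print("baseline:", [round(x, 2) for x in baseline])
print("shocked: ", [round(x, 2) for x in shocked])
print("shocked steady state:", round(steady_state_stock(0.7, 1.0), 2))
```

With zero persistence the stock is just whatever was written this period. With 70% persistence the same coding effort builds up to a stock a bit more than three times as large, which is the sense in which the shock makes existing code do more of the work.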

Is this how we should think about code? Perhaps it is a stock variable we could think about, sure. But is the coming of robots really a positive shock to the persistence of that code? I feel like I can tell an equally valid story about how robots and AI will mean that code becomes less persistent over time, and that we will continually be reprogramming them to suit our needs. Robots, by operating as general purpose machines, can easily be re-programmed every day with new tasks. A hammer, on the other hand, is “programmed” once into a heavy object useful for hitting things and then is stuck doing that forever. The code embedded in our current non-robot tools is very, very persistent because they are built for single tasks. Hammers don’t even have USB ports, for crying out loud.

Treating Code as a Rival Good. Leaving aside the issue of code’s persistence, their choice of production function for goods does not seem to make sense for how code operates. The production function depends on robots/capital ($K$) and code ($A$). Given their assumed parameters, the production function is

$$Y = K^{\alpha} A^{1-\alpha}$$

and code is treated like any other rival, exclusive factor of production. Their production function assumes that if I hold the amount of code constant, but increase the number of robots, then code-per-robot falls. Each new robot means existing ones will have less code to work with? That seems obviously wrong, doesn’t it? Every time Apple sells an iPhone I don’t have to sacrifice an app so that someone else can use it.

The beauty of code is precisely that it is non-rival and non-exclusive. If one robot uses code, all the other robots can use it too. This isn’t a problem with treating code as a “stock variable”. That’s fine. We can easily think of the stock of code depreciating (people get tired of apps, it isn’t compatible with new software) and accumulating (coders write new code). But to treat it like a rival, exclusive, physical input seems wrong.

You’re going to think this looks trivial, but the production function should look like the following

$$Y = K^{\alpha} A$$

I ditched the $1-\alpha$ exponent on $A$. So what? But this makes all the difference. This modified production function has increasing returns to scale. If I double both robots and the amount of code, output more than doubles. Why? Because the code can be shared across all robots equally, and they don’t degrade each other’s capabilities.
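A quick numerical check of the returns-to-scale claim, as a sketch. I am assuming a Cobb-Douglas form here: output equals K^alpha * A^(1 - alpha) when code is rival, and K^alpha * A when it is non-rival. The value alpha = 0.5 and the function names are illustrative assumptions, not parameters taken from BKLS.

```python
ALPHA = 0.5  # illustrative capital share; an assumption, not a BKLS parameter


def output_rival(K, A, alpha=ALPHA):
    """Code treated as a rival input: constant returns to scale in K and A."""
    return K ** alpha * A ** (1 - alpha)


def output_nonrival(K, A, alpha=ALPHA):
    """Code treated as non-rival: every robot uses the whole stock of code."""
    return K ** alpha * A


def scale_factor(production, K, A, factor=2.0):
    """How much output is multiplied when both inputs are scaled by `factor`."""
    return production(factor * K, factor * A) / production(K, A)


K, A = 3.0, 5.0  # arbitrary starting quantities
print("rival:    ", round(scale_factor(output_rival, K, A), 3))
print("non-rival:", round(scale_factor(output_nonrival, K, A), 3))
```

Doubling both inputs exactly doubles output in the rival case. In the non-rival case output is multiplied by 2^(1 + alpha), which is about 2.83 when alpha is 0.5. The starting quantities do not matter for either ratio.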

This is going to change a lot in their model, because now even if I have a long-run decline in the stock of robots $K$, the increase in $A$ can more than make up for it. I can have fewer robots, but with all that code they are all super-capable of producing goods for us. The original BKLS model assumes that won’t happen because if one robot is using the code, another one cannot.

But I’m unlikely to have a long-run decline in robots (or code) because with increasing returns to scale (IRS) the marginal return to robots is rising with the number of robots, and the marginal return to code is rising with the amount of code. The incentives to build more robots and produce more code are rising. Even if code persists over time, adding new code will always be worth it because of the IRS. More robots and more code mean more goods produced in the long-run, not fewer as BKLS find.

Of course, this means we’ll have produced so many robots that they become sentient and enslave us to serve as human batteries. But that is a different kind of problem entirely.

Valuing Consumption. Leave aside all the issues with production and how to model code. Does their baseline simulation actually indicate immiseration? Their measure of “national income” isn’t defined clearly, so I’m not sure what to do with that number. But they do report the changes in consumption of goods and services. We can back out a measure of consumption per person from that. They index the initial values of service and good consumption to 100. Then, in the “immiserating growth” scenario, service consumption rises to 127, but good consumption falls to 72.

Is this good or bad? Well, to value both initial and long-run total consumption, we need to pick a relative price for the two goods. BKLS index the relative price of services to 100 in the initial period, and the relative price falls to 43 in the long-run.

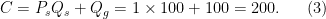

But we don’t want the indexed price, we want the actual relative price. This matters a lot. If the relative price of services is 1 in the initial period, then initial real consumption is

$$1 \times 100 + 100 = 200.$$

In the long-run we need to use the same relative price so that we can compare real consumption over time. In the long-run, with a relative price of services of 1, real consumption is

$$1 \times 127 + 72 = 199.$$

Essentially identical, and my guess is that the difference is purely due to rounding error.

Note what this means. With a relative price of services of 1, real consumption is unchanged after the introduction of robots in their model. This is not immiserating growth.

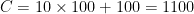

But wait, who said that the relative price of services had to be 1? What if the initial price of services was 10? Then initial real consumption would be $10 \times 100 + 100 = 1100$, and long-run real consumption would be $10 \times 127 + 72 = 1342$, and real consumption has risen by 22% thanks to the robots!

Or, if you feel like being pessimistic, assume the initial relative price of services is 0.1. Then initial real consumption is $0.1 \times 100 + 100 = 110$, and long-run consumption is $0.1 \times 127 + 72 = 84.7$, a drop of 23%. Now we’ve got immiserating growth.

The point is that the conclusion depends entirely on the choice of the actual relative price of services. What is the actual relative price of services in their simulation? They don’t say anywhere that I can find, they only report the indexed value is 100 in the initial period. So I don’t know how to evaluate their simulation. I do know that their having service consumption rise by 27% and good consumption fall by 28% does not necessarily imply that we are worse off.
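The arithmetic is easy to replay for any relative price. The sketch below values consumption at a fixed relative price of services, with goods as the numeraire, and uses the indexed quantities from the BKLS simulation (100 and 100 initially, 127 for services and 72 for goods in the long run). The function names are mine, and the break-even price is just the algebra implied by those four numbers, not something BKLS report.

```python
def real_consumption(services, goods, price_of_services):
    """Value of consumption in units of goods at a fixed relative price."""
    return price_of_services * services + goods


def percent_change(price_of_services):
    """Percent change in real consumption from the initial period to the long run."""
    initial = real_consumption(100, 100, price_of_services)
    long_run = real_consumption(127, 72, price_of_services)
    return 100.0 * (long_run - initial) / initial


def break_even_price():
    """Relative price at which real consumption is unchanged.

    Solves p * 127 + 72 = p * 100 + 100, i.e. 27 * p = 28.
    """
    return (100 - 72) / (127 - 100)


for p in (0.1, 1, 10):
    print(f"price of services = {p}: change = {percent_change(p):.1f}%")
print(f"break-even price = {break_even_price():.3f}")
```

The break-even price comes out just above 1. Any relative price of services higher than that makes the robot scenario an improvement in real consumption, and any lower price makes it a loss.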

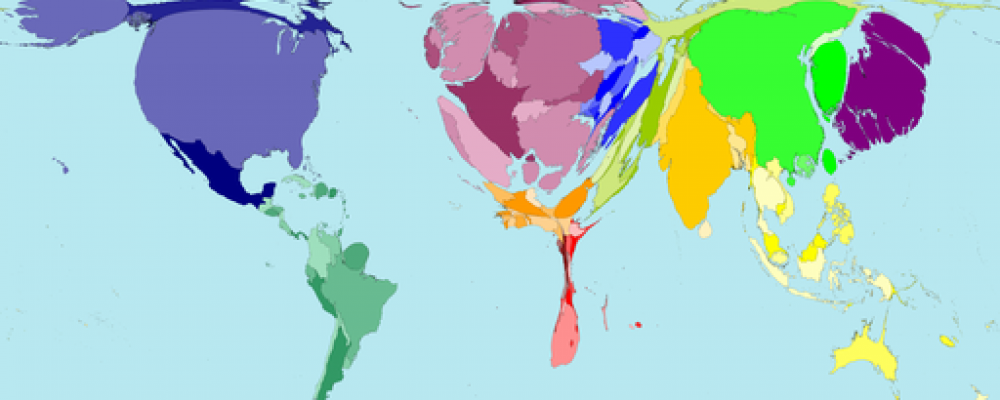

Their model is so disconnected from reality (as are most models; this isn’t a BKLS failing) that we cannot simply look at a series from the BLS on service prices to get the right answer here. But we do know that the relative price of services to goods rose a bunch from 1950 to 2010 (see here). From an arbitrary baseline of 1 in 1950, the price of services relative to manufacturing was about 4.33 in 2010. You can’t just plug in 4.33 to the above calculation, but it gives you a good idea of how expensive services are compared to manufacturing goods. On the basis of this, I would lean towards assuming that the relative price of services is bigger than 1, and probably significantly bigger, and that the effect of the BKLS robots is an increase in real consumption in the long-run.

Valuing Welfare. BKLS provide some compensating differential measurements for their immiserating scenario, which are negative. This implies that people would be willing to pay to avoid robots. They are worse off.

This valuation depends entirely on the weights in the utility function, and those weights seem wrong. The utility function they use is $U = 0.5 \ln C_S + 0.5 \ln C_G$ (with $C_S$ consumption of services and $C_G$ consumption of goods), or equal weights on the consumption of both services and goods. With their set-up, people in the BKLS model will spend exactly 50% of their income on services, and 50% on goods.

But that isn’t what expenditure data look like. In the US, services take up about 70-80% of expenditure, and goods only the remaining 20-30%. So the utility function should probably look like $U = 0.75 \ln C_S + 0.25 \ln C_G$. And this changes the welfare impact of the arrival of robots.

Let $C_S$ and $C_G$ both equal 1 in the baseline, pre-robots. Then for BKLS baseline utility is 0, and in my alternative utility is also 0. So we start at the same value.

With robots, goods consumption falls to 0.72 and service consumption rises to 1.27. For BKLS this gives utility of $0.5 \ln 1.27 + 0.5 \ln 0.72 \approx -0.045$. Welfare goes down with the robots. With my weights (0.75 on services, 0.25 on goods), utility is $0.75 \ln 1.27 + 0.25 \ln 0.72 \approx 0.097$. Welfare goes up with the robots.
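Here is a small sketch of the welfare comparison. I am assuming a log utility function with weights that sum to one, which is consistent with baseline utility being 0 when both consumption levels equal 1. The 0.75 weight on services is my illustrative pick from the 70-80% expenditure range, and the function names are hypothetical.

```python
import math


def log_utility(services, goods, weight_on_services):
    """Weighted log utility over services and goods; weights sum to one."""
    return (weight_on_services * math.log(services)
            + (1.0 - weight_on_services) * math.log(goods))


def break_even_weight(services, goods):
    """Weight on services at which utility equals the baseline value of 0.

    Solves w * ln(services) + (1 - w) * ln(goods) = 0 for w.
    """
    return -math.log(goods) / (math.log(services) - math.log(goods))


# Robot scenario: services rise to 1.27, goods fall to 0.72 (baseline is 1 and 1).
equal_weights = log_utility(1.27, 0.72, weight_on_services=0.5)
service_heavy = log_utility(1.27, 0.72, weight_on_services=0.75)

print(f"equal weights:     {equal_weights:.3f}")
print(f"0.75 on services:  {service_heavy:.3f}")
print(f"break-even weight: {break_even_weight(1.27, 0.72):.3f}")
```

Under these assumptions the sign of the welfare change flips once the weight on services exceeds roughly 0.58. Equal weights fall just below that cutoff, while weights in line with the expenditure data fall well above it.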

Which is right? It depends again on assumptions about how to value services versus goods. If you overweight goods versus services, then yes, the reduction of goods production in the BKLS scenario will make things look bad. But if you flip that around and overweight services, things look great. I’ll argue that overweighting services seems more plausible given the expenditure data, but I can’t know for sure. I am wary, though, of the BKLS conclusions because their assumptions are not inconsequential to their findings.

What Do We Know. If it seems like I’m picking on this paper, it is because the question they are trying to answer is so interesting and important, and I spent a lot of time going through their model. As I said above, we need some kind of baseline model of how future hyper-automated production influences the economy. BKLS should get a lot of credit for taking a swing at this. I disagree with some of the choices they made, but they are doing what needs to be done. I do think that you have to allow for IRS in production involving code, though. It just doesn’t make sense to me to do it any other way. And if you do that, goods production is going to go up, not down as they find.

The thing that keeps bugging me is that I have this suspicion that you can’t eliminate the measurement problem with real consumption or welfare entirely. This isn’t a failure of BKLS in particular, but probably an issue with any model of this kind. We don’t know the “true” utility function, so there is no way we’ll ever be able to say for sure whether robots will or will not raise welfare. In the end it will always rest on assumptions regarding utility weights.